Apono vs StrongDM: Which Privileged Access Solution Delivers Better Developer Experience?

Privileged access solutions are often evaluated on control strength and connectivity. Can they broker access? Can they restrict entry points? Can they capture activity?

Those questions matter. But they overlook something that ultimately determines whether least privilege holds in practice.

Does the system make it easy for engineers to get the right access, at the right time, without friction?

Because if it does not, behavior adapts.

Engineers are problem solvers. When access becomes an obstacle instead of an enabler, they optimize around it. That is where developer experience becomes a security issue.

Where DevX Friction Starts

Engineers usually know what they need to accomplish. The friction begins when translating that goal into the right access request inside a privileged access solution.

In many environments, the privileged access solution becomes another system engineers must navigate rather than an extension of their workflow.

That friction tends to show up in familiar ways:

- Uncertainty about which permission set maps to the task

- Context switching into a separate UI within the privileged access solution

- Delays during debugging or incident response

- Repeating requests because the initial scope was incorrect

Individually, these issues seem minor. Repeated enough times, they shape behavior.

If a privileged access solution makes requesting access uncertain or disruptive, engineers begin asking for broader access up front to avoid getting blocked later. Certainty starts to outweigh precision.

That drift does not begin with weak policy.

It begins with friction inside the privileged access solution itself.

Where StrongDM’s Model Creates Developer Friction

StrongDM is built around proxying and brokering connections to infrastructure. That approach can be effective for controlling entry points into databases, clusters, and servers.

However, its access model relies heavily on predefined access constructs.

Static Definitions in a Dynamic Environment

Permissions and mappings must be created in advance, and engineers must know which access definition applies to their task.

In modern cloud environments, that alignment rarely stays perfect for long.

When definitions are too narrow, engineers get blocked and must submit additional requests. When definitions are broadened to reduce friction, they stop being tightly scoped. The tension between usability and least privilege becomes ongoing rather than occasional.

Over time, predictable patterns emerge:

- Engineers request broader access up front

- Access definitions expand to reduce repeated friction

- Least privilege becomes harder to enforce consistently

Workflow Friction in Privileged Access Solutions

Engineers increasingly expect access to fit into the tools they already use. Slack, CLI, internal developer portals, and AI-driven interfaces are now part of daily engineering life. A modern privileged access solution should integrate directly into those workflows rather than sit outside them.

In many StrongDM deployments, Slack-based workflows are not universally available and are typically tied to enterprise-tier plans. For many teams, the privileged access solution still requires stepping into a separate interface to request access.

During routine work, that extra step may be manageable. During production incidents, it becomes disruptive because it forces context switching at exactly the wrong time.

StrongDM also does not provide Model Context Protocol (MCP) integrations or AI-assisted request guidance. Engineers are expected to know exactly which resource and permission level they need, and the privileged access solution does not help them translate intent into the right scope.

When engineers are unsure which resource or permission set maps to their task within the privileged access solution, the process turns into trial and error.

They request access, realize it is insufficient, and resubmit.

To avoid repeating that loop, they begin asking for broader access up front, preferring certainty over precision.

Each extra step pulls them further out of their workflow and makes over-requesting feel like the safer option.

Over time, friction inside the privileged access solution shapes behavior. Engineers optimize for speed and predictability instead of least privilege. They do not disregard security; the access experience simply makes precision harder than it needs to be.

Ready to see how a modern privileged access solution compares? Evaluate Apono vs. StrongDM in our side-by-side comparison.

How Apono Aligns Access With Developer Workflows

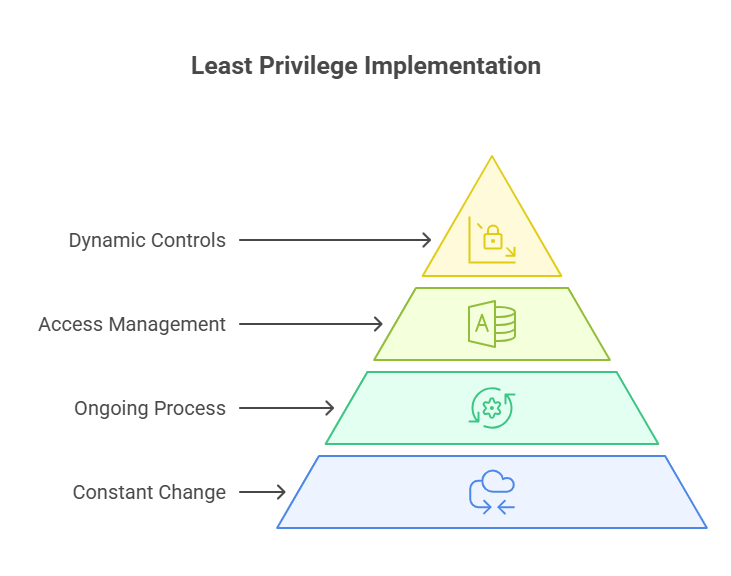

Apono keeps the same foundational principles. Access must be requested. Guardrails must exist. Privileges must expire.

The difference is how the experience is delivered.

Engineers can interact with Apono through Slack, Teams, CLI, Backstage, a dedicated portal, and AI-driven interfaces including MCP integrations. The request process happens inside the workflow instead of outside it.

When engineers are unsure what to request, the system provides guidance. Instead of forcing users to translate intent into a precise permission set under pressure, Apono helps map goals to the appropriate scope.

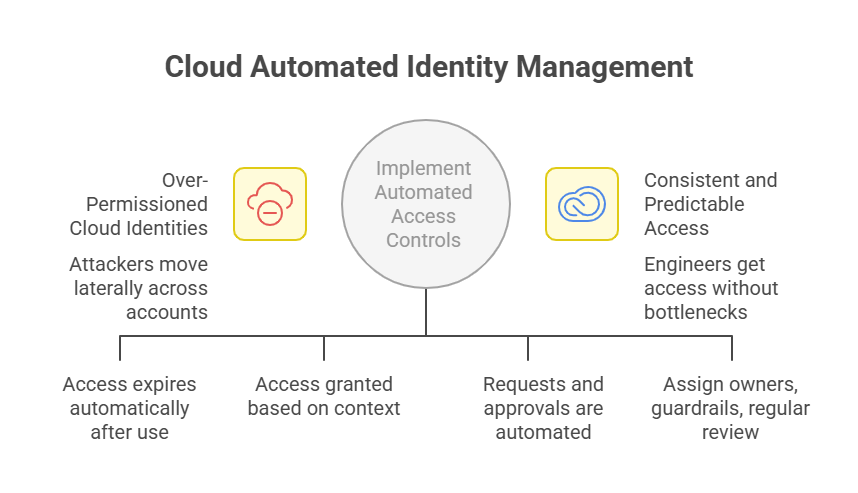

Access is dynamically provisioned at runtime under policy guardrails rather than relying solely on static, pre-created definitions. Privileges align closely with the task at hand and expire automatically when the work is done.

If work runs longer than expected, extensions can occur within policy without forcing engineers to restart the request process. That reduces the incentive to ask for longer or broader access up front.
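To make the runtime-provisioning and in-policy extension model concrete, here is a minimal Python sketch of a time-bound grant with policy-capped extensions. It is not Apono's API or implementation: `Policy`, `Grant`, `request_access`, and `extend` are hypothetical names, and the guardrails (a per-resource allowlist of permissions, a default time-to-live, and a cap on total grant lifetime) are assumptions chosen for illustration.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


# Hypothetical policy guardrails for one resource (illustrative assumption).
@dataclass(frozen=True)
class Policy:
    allowed_permissions: frozenset
    default_ttl: timedelta
    max_total_duration: timedelta


# Hypothetical just-in-time grant: valid only between issue time and expiry.
@dataclass
class Grant:
    user: str
    resource: str
    permission: str
    issued_at: datetime
    expires_at: datetime

    def is_active(self, now: datetime) -> bool:
        return self.issued_at <= now < self.expires_at


def request_access(user: str, resource: str, permission: str,
                   policy: Policy, now: datetime) -> Grant:
    # Provision at request time, scoped to one permission the policy allows,
    # and set an automatic expiry instead of creating standing access.
    if permission not in policy.allowed_permissions:
        raise PermissionError(f"{permission!r} is not allowed on {resource!r}")
    return Grant(user, resource, permission, now, now + policy.default_ttl)


def extend(grant: Grant, extra: timedelta, policy: Policy, now: datetime) -> Grant:
    # Extend the existing grant (no new request) only while it is still active
    # and only if its total lifetime stays within the policy cap.
    if not grant.is_active(now):
        raise PermissionError("grant has already expired")
    new_expiry = grant.expires_at + extra
    if new_expiry - grant.issued_at > policy.max_total_duration:
        raise PermissionError("extension exceeds policy maximum")
    grant.expires_at = new_expiry
    return grant


# Usage: a one-hour read grant, extended once before it lapses.
policy = Policy(frozenset({"db:read"}), timedelta(hours=1), timedelta(hours=4))
t0 = datetime(2024, 1, 1, 9, 0)
g = request_access("alice", "orders-db", "db:read", policy, t0)
extend(g, timedelta(hours=1), policy, t0 + timedelta(minutes=50))
print(g.is_active(t0 + timedelta(minutes=90)))  # True
print(g.is_active(t0 + timedelta(hours=3)))     # False
```

The design choice worth noting is that the extension path checks the same policy as the original request, so extending stays cheap for the engineer without letting a grant quietly become standing access.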

The result is not a looser system. It is a system engineers are more likely to use correctly.

Make the Secure Path the Fastest Path

At their core, engineers are problem solvers. If getting the access they need becomes a problem, they will find ways around it.

It is up to security teams to put tools and processes in place that let engineers move faster without compromising security priorities. When requests are intuitive, guided, and time-bound by design, least privilege becomes practical instead of aspirational.

Evaluate Your StrongDM Model

If you’re currently using StrongDM, the question isn’t whether it brokers access.

It’s whether it eliminates standing privilege without creating workflow friction.

Book a 30-minute session to evaluate your privileged access solution:

- Compare static vs. dynamic access models

- Identify areas where standing privilege persists

- Assess whether your current setup supports Zero Standing Privilege

- Explore how modern Cloud PAM integrates directly into engineering workflows

🎁 Qualified StrongDM customers receive a $200 Amazon gift card after completing the session.